Uploading large files via HTTP can be a challenge.

It can result in timeouts and frustrated users.

Additionally, large uploads may not be possible if your hosting provider imposes HTTP file upload size restrictions (most do).

The solution is “chunking”.

Chunking means splitting a single large file into multiple smaller pieces (chunks) and uploading them to the server one at a time. The server then stitches the chunks back together to recreate the original file.

We’ll need to create some JavaScript to break the file into chunks and then upload each chunk one by one.
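Here is a minimal sketch of the splitting step. The stitching happens on the server later. The hypothetical `splitIntoChunks` helper below uses `Blob.slice()`, the same method the upload code calls on the selected `File` (a `File` is a `Blob`). It runs in a browser or in Node.js 18+, where `Blob` is a global.

```javascript
// Hypothetical helper: split a Blob (or File) into slices of at most chunkSize bytes
function splitIntoChunks(blob, chunkSize) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const chunks = [];
  for (let start = 0; start < blob.size; start += chunkSize) {
    // The end byte is exclusive and clamped, so the final chunk may be shorter
    chunks.push(blob.slice(start, Math.min(start + chunkSize, blob.size)));
  }
  return chunks;
}

// Usage: a 2.5 MiB blob split into 1 MiB chunks
const sample = new Blob([new Uint8Array(2.5 * 1024 * 1024)]);
const parts = splitIntoChunks(sample, 1024 * 1024);
console.log(parts.map((p) => p.size)); // [ 1048576, 1048576, 524288 ]

// Stitching is just concatenation in the original order
const rebuilt = new Blob(parts);
console.log(rebuilt.size === sample.size); // true
```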

We’ll start with the HTML form:

<html>

<head>

<title>Upload A Large File</title>

</head>

<body>

<form action="path/to/upload/page" id="form">

<input type="file" name="file" id="file"/>

<input type="button" value="Upload" id="submit"/>

<input type="button" value="Cancel" id="cancel"/>

</form>

<script>

/* JS Here */

</script>

</body>

</html>

Now add the JavaScript.

Get references to our HTML buttons:

// Buttons

const submitButton = document.getElementById("submit");

const cancelButton = document.getElementById("cancel");

Set up the tracking variables:

// Array to store the chunks of the file

let chunks = [];

// Handle for the interval that drives the upload loop

let uploadTimeout;

// Boolean to check if a chunk is currently being uploaded

let uploading = false;

// Maximum number of retries for a chunk

const maxRetries = 3;

// AbortController for the current fetch request (used to cancel it)

let controller;

Create some utility functions:

// Function to cancel the upload and abort the fetch requests

const cancelUpload = () => {

clearUpload();

if (controller)

controller.abort();

enableUpload();

};

// Function to clear/reset the upload variables

const clearUpload = () => {

clearInterval(uploadTimeout);

uploading = false;

chunks = [];

};

// Function to enable the submit button

const enableUpload = () => {

submitButton.removeAttribute("disabled");

submitButton.setAttribute("value","Upload");

};

// Function to disable the submit button

const disableUpload = () => {

submitButton.setAttribute("disabled","disabled");

submitButton.setAttribute("value","Uploading...");

};

Add the cancel button event listener:

// Event listener for the cancel button

cancelButton.addEventListener("click",(e) => {

cancelUpload();

});

Add the submit button event listener:

// Event listener for the submit button

submitButton.addEventListener("click",(e) => {

const fileInput = document.getElementById("file");

if (fileInput.files.length) {

disableUpload();

const file = fileInput.files[0];

// Break the file into 1MB chunks

const chunkSize = 1024 * 1024;

const totalChunks = Math.ceil(file.size / chunkSize);

// Setup chunk array with starting byte and endbyte that will be sliced from the file and uploaded

let startByte = 0;

for (let i = 1; i <= totalChunks; i++) {

const endByte = Math.min(startByte + chunkSize,file.size);

// [chunk number, start byte, end byte, uploaded, retry count, error, promise]

chunks.push([i,startByte, endByte, null, 0, null, null]);

startByte = endByte;

}

// Begin uploading the chunks one after the other

uploadTimeout = setInterval(() => {

if (!uploading) {

uploading = true; // Prevent the next interval from starting until the current chunk is uploaded

// Upload the first chunk that hasn't already been uploaded

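for (let i = 0; i < chunks.length; i++) {

if (!chunks[i][3]) {

// The server appends chunks in arrival order, so a chunk that has used up its retries ends the whole upload

if (chunks[i][4] >= maxRetries) {

console.error("Giving up on chunk " + chunks[i][0] + ":", chunks[i][5]);

cancelUpload();

alert("Error uploading chunk. Check the browser debug window for details.");

break;

}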

// Get the binary chunk (start byte / end byte)

const chunk = file.slice(chunks[i][1],chunks[i][2]);

const formData = new FormData();

formData.append("chunk",chunk);

formData.append("chunknumber",chunks[i][0]);

formData.append("totalchunks",totalChunks);

formData.append("filename",file.name);

// Create an AbortController to cancel the fetch request

// Need a new one for each fetch request

controller = new AbortController();

fetch(document.getElementById("form").getAttribute("action"), {

method: "POST",

body: formData,

signal: controller.signal

}).then(response => {

if (response.status == 413) {

cancelUpload();

alert("The chunk is too large. Try a smaller chunk size.");

} else {

response.json().then(data => {

if (data && data.success) {

// Uploaded successfully

chunks[i][3] = true; // Mark the chunk as uploaded

uploading = false; // Allow the next chunk to be uploaded

if (chunks[i][0] == totalChunks) {

// Last chunk uploaded successfully

clearUpload();

enableUpload();

alert("File uploaded successfully");

}

} else {

// Error uploading

cancelUpload();

alert("Error uploading chunk. Check the browser debug window for details.");

console.error("Unhandled error uploading chunk");

}

}).catch(error => {

// Error uploading

cancelUpload();

alert("Error uploading chunk. Check the browser debug window for details.");

console.error("Error:",error);

});

}

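}).catch(error => {

// Cancelling aborts the fetch; chunks has already been cleared, so there is nothing to retry

if (error.name === "AbortError") return;

// Network error: record it so this chunk can be retried

chunks[i][4]++; // Increment the retry count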

chunks[i][5] = error; // Store the error

uploading = false; // Allow retrying this chunk

console.error("Error:",error); // Log the error

});

break;

}

}

}

},1000);

} else {

alert("No file selected.");

}

});

In the code above, we store the start and end bytes of each chunk in an array that we can loop through. The loop runs on a one-second interval in the background so the browser isn’t blocked while the file uploads (Web Workers are another option, but I’m not very familiar with them). On each tick we make sure no chunk is currently uploading, find the next chunk that hasn’t been uploaded, slice that chunk’s start and end bytes from the file, and upload it.
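The interval-based loop works, but the same one-chunk-at-a-time behaviour can also be written with `async`/`await`. That removes the polling and the `uploading` flag. The sketch below is an alternative design, not the code above. `uploadChunks` and the injected `sendChunk(chunk, info)` function are hypothetical names. `sendChunk` is assumed to resolve when the server confirms the chunk and reject on failure. A chunk that still fails after `maxRetries` attempts stops the whole upload, because skipping it would leave a gap in the stitched file.

```javascript
// Hypothetical alternative to the setInterval loop: upload chunks strictly in order,
// retrying each one up to maxRetries times before abandoning the whole file.
async function uploadChunks(file, chunkSize, sendChunk, maxRetries = 3) {
  const totalChunks = Math.ceil(file.size / chunkSize);
  for (let number = 1; number <= totalChunks; number++) {
    const startByte = (number - 1) * chunkSize;
    const chunk = file.slice(startByte, Math.min(startByte + chunkSize, file.size));
    let sent = false;
    let lastError;
    for (let attempt = 1; attempt <= maxRetries && !sent; attempt++) {
      try {
        // sendChunk is assumed to resolve once the server confirms the chunk, and reject otherwise
        await sendChunk(chunk, { number, totalChunks, attempt });
        sent = true;
      } catch (error) {
        if (error && error.name === "AbortError") throw error; // a cancelled upload is not retried
        lastError = error; // remember why it failed, then try the same chunk again
      }
    }
    if (!sent) {
      // Skipping a chunk would leave a gap in the stitched file, so stop here
      throw new Error(`Chunk ${number} failed after ${maxRetries} attempts`, { cause: lastError });
    }
  }
  return totalChunks;
}

// Usage with a stand-in sendChunk that fails once on chunk 2 and then succeeds
const log = [];
let failedOnce = false;
const fakeSend = async (chunk, { number, totalChunks, attempt }) => {
  if (number === 2 && !failedOnce) {
    failedOnce = true;
    throw new Error("simulated network error");
  }
  log.push(`chunk ${number}/${totalChunks}: ${chunk.size} bytes (attempt ${attempt})`);
};
uploadChunks(new Blob([new Uint8Array(2500)]), 1000, fakeSend).then((count) => console.log(count, log));
```

In the page itself, `sendChunk` would wrap the original `fetch` and `FormData` call and throw when the JSON response doesn't report `success`. Passing an `AbortController` signal to `fetch` would still let the Cancel button stop the upload.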

Now we need to create the ColdFusion server-side code to accept the binary chunks and then stitch them all together into a complete file.

<cfscript>

if (compareNoCase(cgi.request_method, "post") eq 0) {

/*

ColdFusion will take the binary data supplied in the form.chunk variable

and write it to a temporary file on the server and then provide the path to that

temp file in the form.chunk variable.

*/

binaryChunk = fileReadBinary(form.chunk);

/*

Make sure to clean up the temporary file, because we are still ultimately uploading a

large file to the server, and we don't want the drive that holds the ColdFusion temp directory

to fill up.

*/

fileDelete(form.chunk);

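// getFileFromPath() keeps only the file name, so a client can't write outside the uploads folder

uploadedFile = expandPath("path/to/uploads/#getFileFromPath(form.filename)#");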

if (form.chunknumber eq 1 AND fileExists(uploadedFile))

fileDelete(uploadedFile); // Overwrite the file if it already exists

// Now we can append the binary data to the file we are uploading to the server

uploadFile = fileOpen(uploadedFile,"append");

try {

// Write the binary data to the file

fileWrite(uploadFile,binaryChunk);

} finally {

// Close the file, even if the write fails

fileClose(uploadFile);

}

}

</cfscript>

<cfcontent type="application/json" reset="true"><cfoutput>#serializeJSON({"success":true})#</cfoutput>

On the server side, ColdFusion accepts the binary chunk sent in the form field and writes it to a temporary file in the ColdFusion temp directory. It’s important to be aware of this, because thousands of 1MB chunk files can quickly fill up the server’s drive. The code above reads each temporary file and deletes it, then appends the binary data to the uploaded file.

We return a JSON response with a success flag so the client-side JavaScript will upload the next chunk.
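For comparison, here is the same stitching logic as a Node.js sketch. The first chunk overwrites any existing file and later chunks are appended. It is not part of the ColdFusion solution. `appendChunk` and its arguments are hypothetical. It uses `path.basename()` to strip directory components from the client-supplied file name, playing the same role `getFileFromPath()` can play in ColdFusion, so a request can't write outside the uploads folder.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Hypothetical Node.js version of the stitching step: chunk 1 starts a fresh file,
// and every later chunk is appended to the end of it (chunkNumber is a number here).
function appendChunk(uploadDir, clientFileName, chunkNumber, chunkBytes) {
  // Never trust the client's file name: keep only its final path segment
  const safeName = path.basename(clientFileName);
  if (!safeName || safeName === "." || safeName === "..") {
    throw new Error("Invalid file name");
  }
  const target = path.join(uploadDir, safeName);
  if (chunkNumber === 1) {
    fs.writeFileSync(target, chunkBytes); // overwrite any earlier file with the same name
  } else {
    fs.appendFileSync(target, chunkBytes);
  }
  return target;
}

// Usage: stitch three chunks into one file inside a temporary directory
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "chunks-"));
appendChunk(dir, "report.bin", 1, Buffer.from("Hello, "));
appendChunk(dir, "report.bin", 2, Buffer.from("chunked "));
const out = appendChunk(dir, "../report.bin", 3, Buffer.from("world")); // directory part is dropped
console.log(fs.readFileSync(out, "utf8")); // Hello, chunked world
```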

That’s it. Pretty simple.

It’s possible to expand on this example and provide things like progress and status to further enhance the user experience.
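One possible starting point for progress reporting is the hypothetical `uploadProgress` helper below. It works from the `chunks` array built in the submit handler, whose entries are `[chunk number, start byte, end byte, uploaded, retry count, error, promise]`. It sums the byte ranges of the chunks marked as uploaded, so a short final chunk is weighted correctly. The result could be shown in a `<progress>` element or the button label on each interval tick.

```javascript
// Hypothetical helper: percentage of bytes uploaded, based on the chunks array
// ([chunk number, start byte, end byte, uploaded, retry count, error, promise]).
function uploadProgress(chunks) {
  let total = 0;
  let done = 0;
  for (const [, startByte, endByte, uploaded] of chunks) {
    const bytes = endByte - startByte;
    total += bytes;
    if (uploaded) done += bytes;
  }
  return total === 0 ? 0 : Math.round((done / total) * 100);
}

// Usage: a 2.5 MB file whose first two 1 MB chunks have been uploaded
const exampleChunks = [
  [1, 0, 1048576, true, 0, null, null],
  [2, 1048576, 2097152, true, 0, null, null],
  [3, 2097152, 2621440, null, 0, null, null],
];
console.log(uploadProgress(exampleChunks) + "%"); // 80%
```

Note that `clearUpload()` empties the array, so read the progress before the upload is reset.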


If you’ve ever needed to use ColdFusion to manipulate Word documents, you might have tried a third-party library like docx4j or Apache POI.

While these libraries are very robust, I found them limiting in different ways. Apache POI lacks built-in mail merge capability (which seems very odd to me, given what follows), and docx4j threw an odd Jakarta error that I was not able to fix.

I also looked at paid libraries like Aspose, but the licensing costs were prohibitive.

Finally, I decided on direct OOXML manipulation.

This turned out to be much easier than I anticipated, once I learned that Office files like Word docx files are actually Zip archives!

Who knew!?!?!
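You can confirm this yourself. A .docx file, like other Zip archives with entries, starts with a Zip local file header whose signature is the four bytes `50 4B 03 04` ("PK\x03\x04"). The small Node.js check below is only an illustration. `looksLikeZip` is a hypothetical name, and the test is a heuristic, not a full validation of the archive.

```javascript
const fs = require("node:fs");

// Zip local file header signature: "PK\x03\x04"
const ZIP_SIGNATURE = Buffer.from([0x50, 0x4b, 0x03, 0x04]);

// Hypothetical helper: does the file start with the Zip local file header signature?
// This is a quick heuristic, not a validation of the whole archive.
function looksLikeZip(filePath) {
  const header = Buffer.alloc(4);
  const fd = fs.openSync(filePath, "r");
  try {
    const bytesRead = fs.readSync(fd, header, 0, 4, 0);
    return bytesRead === 4 && header.equals(ZIP_SIGNATURE);
  } finally {
    fs.closeSync(fd);
  }
}

// Usage (placeholder path): looksLikeZip("Word Merge Fields.docx") is expected to return true
```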

So it’s possible to use the native cfzip tag to “open” the file.

<cfzip action="unzip" file="{Your Office Document File Path}" destination="{A temporary folder}" recurse="yes"/>

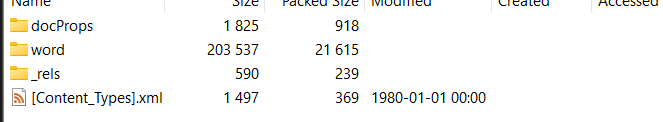

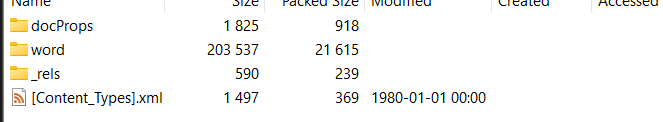

Once the file is unzipped to a folder, you will have a directory structure like this:

Within the “word” subfolder there are several XML files. The one I am manipulating for the mail merge is document.xml.

This file can be read into ColdFusion using xmlParse() and from there can be manipulated like any other XML object using ColdFusion’s native tags and commands.

I couldn’t find any “standard” way of altering the XML to merge data into the various template fields.

To get an idea of how Word does it, I created a test Word document with a simple and a complex mail merge field: Word Merge Fields.docx

I ran this document through a Word mail merge, unzipped the resulting file, and reviewed the document.xml of the merged document. Simple merge fields (fldSimple) are replaced completely by the merged value, while complex fields are delimited by begin and end XML nodes (w:fldChar elements) that must be parsed and manipulated in specific ways.

To merge a complex field properly, it’s necessary to determine whether there is a separator XML node within the field. If so, the node(s) between the separator node and the end node are used for the value. If no separator node is found, the entire field is replaced with the merged value, just like a simple merge field.
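To make the two cases concrete, here is a sketch of the merge logic over a deliberately simplified model of document.xml. It is not real XML parsing. Each array element stands for one run. The hypothetical `kind` values loosely correspond to WordprocessingML nodes: `fldSimple`, `fldChar` (with a `charType` of `begin`, `separate` or `end`, mirroring `w:fldCharType`), `instrText`, and `text`. Fields are assumed to use `MERGEFIELD <name>` instructions and not to be nested. In ColdFusion the same walk would be done over the nodes returned by `xmlParse()`.

```javascript
// Simplified, hypothetical run model (not a real OOXML parser):
//   { kind: "fldSimple", instr, props }                  a complete simple field
//   { kind: "fldChar", charType: "begin" | "separate" | "end" }
//   { kind: "instrText", text }                          field code, e.g. " MERGEFIELD FirstName "
//   { kind: "text", text, props }                        ordinary text run (props = formatting)

// Extract the field name from an instruction such as " MERGEFIELD FirstName \* MERGEFORMAT "
function mergeFieldName(instr) {
  const match = /MERGEFIELD\s+"?([^\s"\\]+)/i.exec(instr);
  return match ? match[1] : null;
}

function mergeRuns(runs, data) {
  const out = [];
  for (let i = 0; i < runs.length; i++) {
    const run = runs[i];
    if (run.kind === "fldSimple") {
      const name = mergeFieldName(run.instr);
      // Simple field: replaced entirely by the merged value (non-merge fields are left alone)
      out.push(name === null ? run : { kind: "text", text: String(data[name] ?? ""), props: run.props });
      continue;
    }
    if (run.kind !== "fldChar" || run.charType !== "begin") {
      out.push(run);
      continue;
    }
    // Complex field: gather the instruction text, then locate the optional separator and the end marker
    let instr = "";
    let separator = -1;
    let end = i + 1;
    for (; end < runs.length; end++) {
      const r = runs[end];
      if (r.kind === "instrText" && separator < 0) instr += r.text;
      if (r.kind === "fldChar" && r.charType === "separate") separator = end;
      if (r.kind === "fldChar" && r.charType === "end") break;
    }
    const name = mergeFieldName(instr);
    if (name === null) {
      out.push(...runs.slice(i, end + 1)); // not a MERGEFIELD: keep the field as-is
    } else {
      // With a separator, the first result run keeps its formatting and receives the value;
      // without one, the whole field collapses to a single plain text run
      const result = separator >= 0 ? runs.slice(separator + 1, end).find((r) => r.kind === "text") : undefined;
      out.push({ kind: "text", text: String(data[name] ?? ""), props: result ? result.props : undefined });
    }
    i = end; // continue after the end marker
  }
  return out;
}

// Usage: "Dear «FirstName» «LastName»" with one simple and one complex field
const runs = [
  { kind: "text", text: "Dear " },
  { kind: "fldSimple", instr: " MERGEFIELD FirstName " },
  { kind: "text", text: " " },
  { kind: "fldChar", charType: "begin" },
  { kind: "instrText", text: " MERGEFIELD LastName " },
  { kind: "fldChar", charType: "separate" },
  { kind: "text", text: "«LastName»", props: { bold: true } },
  { kind: "fldChar", charType: "end" },
];
const merged = mergeRuns(runs, { FirstName: "Ada", LastName: "Lovelace" });
console.log(merged.map((r) => r.text).join("")); // Dear Ada Lovelace
```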

After updating the parsed XML it must be written back to the document.xml file:

<cffile action="write" file="{Path to document.xml}" output="#toString(CF XML object)#" charset="utf-8">

Once the document.xml file has been saved we need to rezip everything back into a Word docx file:

<cfzip action="zip" file="{Full path to resulting docx file}" source="{Folder containing unzipped contents}" recurse="yes"/>

Fully merged document:

Word Merge Fields – Merged.docx

So far I’ve only used this for mail merges, but I’m sure that is only scratching the surface.

There seems to be a potential bug in ColdFusion 2021 Query of Queries.

Today I ran into this error:

Index 5 out of bounds for length 5 null

The index bounds will change based on the number of columns in the query as explained below.

This error was being thrown in a very basic QoQ:

<cfquery name="qUser" dbtype="query"> SELECT * FROM qUsers WHERE User_ID = <cfqueryparam value="#user_id#" cfsqltype="cf_sql_numeric"> </cfquery>

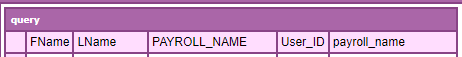

While investigating the source of the original qUsers query, I noticed two columns with the same name:

Looking a little deeper I found that the original qUsers query was being ordered via a QoQ prior to the QoQ throwing the error:

<cfquery name="qUsers" dbtype="query"> SELECT * FROM qUsers ORDER BY payroll_name </cfquery>

So essentially a query of a query of a query.

The problem appears to be that Query of Queries is case-sensitive here. For some reason it adds the ORDER BY column to the query as a separate column, resulting in a query with two columns of the same name.

The solution was to change the ordering QoQ to match the case of the original qUsers query:

<cfquery name="qUsers" dbtype="query"> SELECT * FROM qUsers ORDER BY PAYROLL_NAME </cfquery>
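If you suspect the same problem, it can help to look for column names that collide once case is ignored. The hypothetical helper below is written in JavaScript for illustration rather than ColdFusion. It takes a comma-delimited list of column names, for example one copied from a `<cfdump>` of the query.

```javascript
// Hypothetical diagnostic: find column names that collide when case is ignored,
// e.g. a "PAYROLL_NAME" / "payroll_name" pair left behind by an ORDER BY in a QoQ.
function caseInsensitiveDuplicates(columnList) {
  const seen = new Map(); // lower-cased name -> every spelling encountered
  for (const name of columnList.split(",").map((c) => c.trim()).filter(Boolean)) {
    const key = name.toLowerCase();
    if (!seen.has(key)) seen.set(key, []);
    seen.get(key).push(name);
  }
  return [...seen.values()].filter((names) => names.length > 1);
}

console.log(caseInsensitiveDuplicates("USER_ID,PAYROLL_NAME,EMAIL,payroll_name"));
// [ [ 'PAYROLL_NAME', 'payroll_name' ] ]
```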

I recently installed Nodeclipse for developing Node.js. It’s a nice little editor with some code hinting and a quick Node run command.

Due to my development requirements (and laziness) I wanted to work with the remote JS files using FTP. Nodeclipse does not support FTP out of the box. Enter “Remote System Explorer” for Eclipse.

The plugin installed easily. I entered my connection details. The console started showing my connection status and directory listings. However, attempting to explore the FTP site resulted in a generic “file system input or output error” and/or “java.net.SocketException”.

After some searching I found that the issue actually lies with the underlying Java VM used by Eclipse (Nodeclipse). I’d upgraded to JRE7 and there seems to be a bug in this release that causes an incompatibility with Eclipse.

I changed my shortcut to force Nodeclipse to use the bin folder of a JRE6 install:

eclipse.exe -vm "C:\Program Files\Java\jre6\bin"

NOTE: Ensure you use the matching architecture (32-bit or 64-bit) when pairing JRE6 with Eclipse.

I went digging around for ways to view the active connections in Microsoft SQL Server 2008. To my surprise, Microsoft had moved the Activity Monitor. Apparently they did this way back in SQL Server 2005, and since I skipped 2005, I missed it. To view the open connections:

Simple as that!

An information technology professional with twenty-five years of experience in systems administration, computer programming, requirements gathering, customer service, and technical support.